Prompt Engineering Tools for Prompt Optimization

Introduction to Prompt Engineering Tools for Optimization

Prompt engineering and optimization refer to the systematic process of crafting, refining, and testing the text instructions given to AI models so that they produce the desired outputs. As artificial intelligence becomes increasingly integrated into business workflows, the need for specialized tools to manage and optimize these prompts has grown.

The expanding AI landscape has created a demand for dedicated solutions that help developers, content creators, and businesses harness the full potential of large language models (LLMs). These specialized tools address the complexities of prompt management, testing, and performance monitoring that are essential for building reliable AI applications.

My Take: Prompt engineering tools function as a foundational layer for managing complex prompt workflows in AI systems. As prompt logic and context depth increase, structured optimization tools become essential for maintaining consistency and reproducibility across production environments.

Understanding Prompt Optimization: Benefits and Core Concepts

Prompt optimization directly impacts AI output quality, ensuring more accurate, relevant, and useful responses from language models. By refining prompts through systematic testing and iteration, organizations can significantly improve the performance of their AI applications while reducing errors and inconsistencies.

Key metrics for measuring prompt performance include:

- Cost efficiency (token usage)

- Response latency

- Output accuracy and relevance

- Consistency across similar queries
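
As a rough illustration of how these four metrics could be computed from logged runs, here is a minimal Python sketch. The `RunRecord` fields, the `summarize` helper, and the per-token price are all assumptions made for the example, and exact-match accuracy is a deliberate simplification of what a real evaluation would use.

```python
from dataclasses import dataclass


@dataclass
class RunRecord:
    """One logged model call for the same query (all fields are hypothetical)."""
    prompt_tokens: int
    completion_tokens: int
    latency_s: float
    output: str
    expected: str


def _norm(text: str) -> str:
    # Normalize so trivial whitespace/case differences do not count as mismatches.
    return text.strip().lower()


def summarize(runs, usd_per_1k_tokens=0.002):
    """Aggregate cost, latency, accuracy, and consistency over a list of runs.

    usd_per_1k_tokens is an assumed flat price, not any provider's real rate.
    """
    total_tokens = sum(r.prompt_tokens + r.completion_tokens for r in runs)
    outputs = [_norm(r.output) for r in runs]
    modal_output = max(set(outputs), key=outputs.count)
    return {
        "cost_usd": total_tokens / 1000 * usd_per_1k_tokens,
        "mean_latency_s": sum(r.latency_s for r in runs) / len(runs),
        # Exact-match accuracy: a simplification of real output grading.
        "accuracy": sum(_norm(r.output) == _norm(r.expected) for r in runs) / len(runs),
        # Share of runs that agree with the most common output.
        "consistency": outputs.count(modal_output) / len(outputs),
    }
```

In practice the accuracy check would usually be replaced by a rubric, a human review, or a model-based grader, but the aggregation pattern stays the same.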

One of the primary benefits of prompt optimization is the reduction of AI hallucinations, instances where models generate plausible but factually incorrect information. Through careful prompt design and testing, prompt engineering tools help create more reliable AI systems that users can trust.

My Take: The value of prompt optimization extends beyond efficiency gains. Systematic prompt management supports clearer iteration cycles and contributes to more consistent output quality across repeated use cases.

Essential Features of Top Prompt Engineering Tools

Modern prompt engineering platforms offer several core capabilities that streamline the development and management of effective prompts:

- Prompt management and version control: These tools provide robust systems for organizing prompt libraries and tracking changes over time, ensuring teams can collaborate effectively and maintain a history of prompt evolution.

- A/B testing and experimentation: Built-in frameworks allow developers to compare different prompt variations and objectively measure their performance against defined metrics.

- Performance monitoring: Comprehensive dashboards track key metrics like latency, token usage, and output quality to identify optimization opportunities.

- Prompt playgrounds: Interactive environments enable real-time testing and side-by-side comparison of outputs, facilitating rapid iteration.

- Collaboration features: Team-oriented capabilities support multiple users working together on prompt development and refinement.
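
To make the version-control idea concrete, the following is a minimal in-memory sketch in Python. `PromptRegistry`, `commit`, and `get` are hypothetical names invented for this example and do not correspond to any specific platform's API; a real tool would add persistence, authorship, and access control.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class PromptRegistry:
    """Hypothetical in-memory store: prompt name -> ordered list of versions."""
    _versions: dict = field(default_factory=dict)

    def commit(self, name: str, template: str, note: str = "") -> int:
        """Record a new version and return its number (1-based).

        Committing a template identical to the latest version is a no-op.
        """
        history = self._versions.setdefault(name, [])
        digest = hashlib.sha256(template.encode("utf-8")).hexdigest()[:12]
        if history and history[-1]["digest"] == digest:
            return history[-1]["version"]
        history.append({
            "version": len(history) + 1,
            "digest": digest,
            "template": template,
            "note": note,
        })
        return len(history)

    def get(self, name: str, version=None) -> str:
        """Return the template for a given version, or the latest if omitted."""
        history = self._versions[name]
        entry = history[-1] if version is None else history[version - 1]
        return entry["template"]


registry = PromptRegistry()
registry.commit("summarize", "Summarize the text: {text}", note="initial")
registry.commit("summarize", "Summarize the text in 3 bullets: {text}", note="add format")
```

Storing a content digest alongside each version makes it cheap to detect unchanged commits and to confirm which exact template produced a logged output.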

My Take: Differences between prompt engineering tools often emerge from their emphasis on analytics depth versus workflow integration. Tools that combine testing frameworks with intuitive interfaces tend to support smoother adoption as teams move beyond ad-hoc prompt experimentation.

Key Use Cases and Target Audiences

Prompt engineering tools serve diverse user groups across various industries:

- AI/ML developers and prompt engineers use these platforms to build and maintain high-performing LLM-powered applications, focusing on technical optimization and integration.

- Content creators and marketing teams leverage prompt optimizers to ensure consistent brand voice and generate high-quality content at scale, often prioritizing output consistency and style control.

- Customer support operations implement these tools to develop reliable automated response systems that maintain quality while reducing human intervention.

- RAG (Retrieval-Augmented Generation) applications benefit from optimized prompts that improve data extraction accuracy and relevance, enhancing knowledge-intensive AI systems.

The typical workflow involves creating initial prompts, testing them against real-world scenarios, analyzing performance metrics, and iteratively refining them based on feedback and results.
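
The create, test, analyze, and refine loop described above can be sketched in a few lines of Python. Everything here is an assumption made for illustration: `stub_model` is an offline stand-in for a real LLM call, and the templates, test cases, and exact-match scoring are invented for the example.

```python
def stub_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call so the sketch runs offline."""
    question = prompt.rsplit("Q:", 1)[-1].strip()
    answer = {"capital of France?": "Paris", "2 + 2?": "4"}.get(question, "unknown")
    # Simulate a model that is only concise when explicitly told to be.
    if "Answer with only the answer" in prompt:
        return answer
    return f"Sure! The answer is {answer}."


def score(template: str, cases, model) -> float:
    """Fraction of test cases where the model output exactly matches the expected text."""
    hits = sum(
        model(template.format(question=q)).strip() == expected
        for q, expected in cases
    )
    return hits / len(cases)


def pick_best(variants: dict, cases, model):
    """Score every prompt variant on the same cases and return the winner plus all scores."""
    scores = {name: score(template, cases, model) for name, template in variants.items()}
    best = max(scores, key=scores.get)
    return best, scores


cases = [("capital of France?", "Paris"), ("2 + 2?", "4")]
variants = {
    "A": "Q: {question}",
    "B": "Answer with only the answer.\nQ: {question}",
}
best, scores = pick_best(variants, cases, stub_model)
```

Running every variant against the same fixed set of cases is what makes the comparison fair; the refinement step then consists of editing the winning template and repeating the loop.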

My Take: Different user groups prioritize different aspects of prompt engineering tools. Technical workflows benefit from deeper integration and control, while simplified interfaces and templates reduce friction for non-technical use cases.

Leading Prompt Engineering and Optimization Platforms

The prompt engineering landscape includes several notable platforms, each with distinct capabilities and focus areas:

PromptLayer

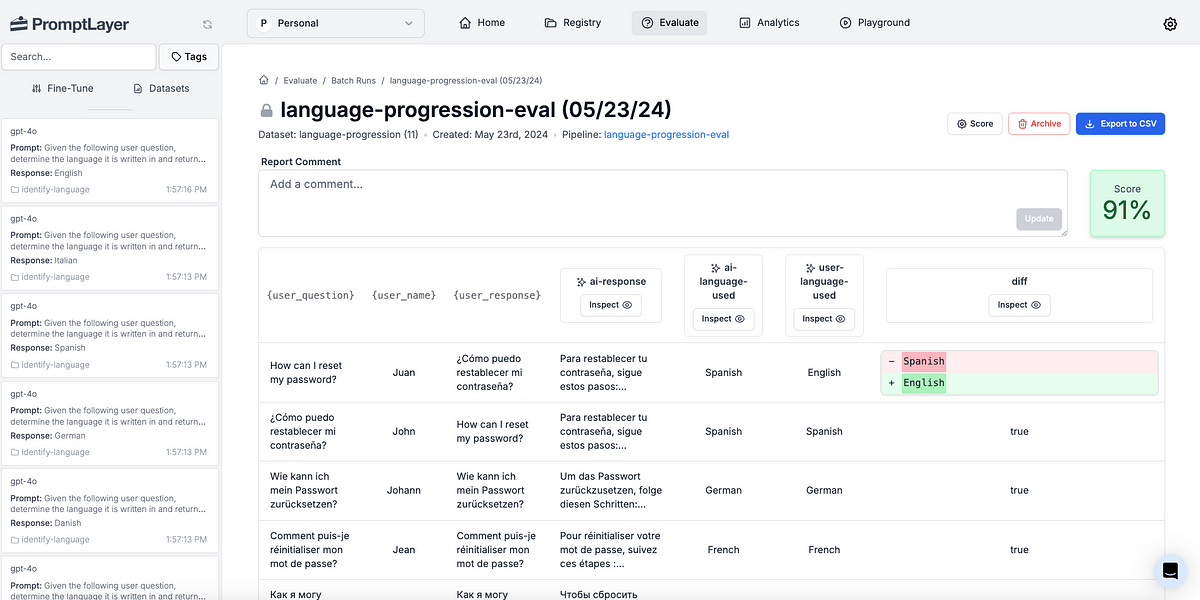

PromptLayer provides comprehensive features for prompt management, testing, and deployment. It offers robust version control and tracking capabilities, allowing teams to maintain a history of prompt evolution and performance. The platform enhances overall prompt engineering workflows through its integration with popular LLM providers.

PromptPerfect

PromptPerfect specializes in generating and refining prompts to achieve improved outcomes from models like GPT-4, ChatGPT, and Midjourney. It offers tools for prompt enhancement and optimization, focusing on maximizing the quality and relevance of AI outputs across different use cases.

Other Notable Tools

Platforms like Dust, Vellum, Helicone, and Weights & Biases each bring unique approaches to prompt optimization. Most include playground environments for real-time testing and side-by-side comparison of outputs, along with integration capabilities for various LLMs and workflows.

My Take: Prompt engineering tools continue to expand in scope through specialized features and deeper integration options. PromptLayer’s version tracking and auditability make it particularly effective for managing iterative prompt changes at scale. These structural capabilities support more controlled experimentation and long-term prompt maintenance.

How I’d Use It

If I were integrating PromptLayer into a real project, I would use it primarily to track prompt versions and compare outputs during iteration. The focus would be on understanding how small prompt changes affect response consistency, clarity, and reproducibility across different use cases.
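
To show the kind of output comparison I have in mind, here is a small sketch using only Python's standard `difflib` module. It does not use PromptLayer's API; the helper names and the `v1`/`v2` labels are assumptions made for the example, and character-level similarity is only a crude proxy for how much a response really changed.

```python
import difflib


def output_similarity(old: str, new: str) -> float:
    """Rough 0..1 similarity between two model outputs (1.0 means identical)."""
    return difflib.SequenceMatcher(None, old, new).ratio()


def diff_outputs(old: str, new: str) -> list:
    """Line-level unified diff between outputs from two prompt versions."""
    return list(
        difflib.unified_diff(
            old.splitlines(),
            new.splitlines(),
            fromfile="v1",  # hypothetical label for the earlier prompt version
            tofile="v2",    # hypothetical label for the later prompt version
            lineterm="",
        )
    )


old_output = "Refunds take 5 days.\nContact support for help."
new_output = "Refunds take 5 business days.\nContact support for help."
for line in diff_outputs(old_output, new_output):
    print(line)
```

A low similarity score after a small prompt edit is a useful signal that the change affected more than intended and deserves a closer look.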

Challenges and Limitations in Prompt Optimization

Despite their capabilities, prompt engineering tools face several inherent challenges:

- The human element remains crucial in prompt design, as even the most sophisticated tools require expert guidance to achieve optimal results.

- Tool-specific learning curves can be steep, requiring users to invest significant time in understanding interfaces and capabilities.

- Addressing model biases and inherent limitations remains challenging, as prompt optimization can mitigate but not eliminate fundamental constraints of underlying AI models.

Integration complexities can also arise when incorporating these tools into existing development workflows, particularly in organizations with established AI pipelines.

My Take: The limitations of prompt engineering tools reflect the evolving complexity of AI development. Systematic prompt testing and version control help produce more predictable and consistent outputs over time. A balanced approach that combines automation with human oversight remains essential for maintaining control over prompt behavior.

Choosing the Right Tool for Your Prompt Optimization Needs

Selecting the appropriate prompt engineering platform requires consideration of several factors:

- Team size and technical expertise

- Project complexity and specific use cases

- Existing LLM stack and integration requirements

- Budget constraints and scaling needs

Organizations should also consider future-proofing their prompt strategy by evaluating how well potential tools can adapt to evolving AI capabilities and changing business requirements.
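
One way to turn the factors above into a comparable number is a simple weighted scoring matrix. The sketch below is a minimal Python example; the criteria keys, the weights, the 1 to 5 scores, and the placeholder names "Tool A" and "Tool B" are all hypothetical and do not describe any real product.

```python
def rank_tools(scores: dict, weights: dict) -> list:
    """Return (tool, weighted_average) pairs sorted from best to worst.

    scores:  tool name -> {criterion: score}, e.g. on a 1-5 scale
    weights: criterion -> relative importance
    """
    total_weight = sum(weights.values())
    totals = {
        tool: sum(weights[c] * per_criterion[c] for c in weights) / total_weight
        for tool, per_criterion in scores.items()
    }
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


# Hypothetical weights reflecting what one team might care about most.
weights = {"team_fit": 2, "use_case_fit": 3, "integration": 3, "budget": 2}

# Hypothetical 1-5 scores for two placeholder tools.
scores = {
    "Tool A": {"team_fit": 4, "use_case_fit": 3, "integration": 5, "budget": 2},
    "Tool B": {"team_fit": 3, "use_case_fit": 5, "integration": 3, "budget": 4},
}

ranking = rank_tools(scores, weights)
```

The numbers matter less than the exercise of agreeing on weights up front, which keeps the evaluation tied to the team's own priorities.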

My Take: Tool selection is closely tied to workflow maturity and integration requirements. Guided setups reduce friction for simpler use cases, while extensible architectures better support complex, multi-model environments over time.

The Future of Prompt Engineering and AI Optimization

The prompt engineering landscape continues to evolve rapidly, with several emerging trends shaping its future:

- Automated prompt generation systems that can suggest and refine prompts based on desired outcomes

- Self-improving prompts that learn from user interactions and performance data

- Increasing integration between prompt engineering and broader AI development workflows

The role of prompt engineers is also evolving, shifting from manual prompt crafting toward more strategic oversight of automated systems and complex prompt architectures.

My Take: Prompt engineering continues to shift toward higher levels of abstraction and automation. As these systems evolve, maintaining human oversight remains essential for controlling prompt behavior and managing unintended output variability.

Pricing Plans

Below is the current pricing overview for the tools mentioned above:

- PromptLayer: $50/mo, Free Plan Available

- PromptPerfect: Free Plan Available

- Dust: $29/mo

- Vellum: Free Plan Available

- Helicone: Free Plan Available

- Weights & Biases: Free Plan Available

Value for Money

When evaluating prompt engineering tools, value extends beyond the basic subscription cost. The tools offering free plans provide excellent entry points for teams beginning their prompt optimization journey, allowing them to test capabilities before committing to paid tiers. These freemium models typically offer sufficient functionality for individual developers or small teams with modest usage requirements.

For organizations scaling their AI initiatives, paid plans from platforms like PromptLayer and Dust deliver enhanced value through advanced features, higher usage limits, and dedicated support. The investment becomes particularly justified for teams working on production applications where improved prompt performance directly impacts business outcomes. The strongest value proposition comes from tools that combine robust technical capabilities with intuitive interfaces, which reduce the learning curve and accelerate team adoption.

Editor’s Summary

Prompt engineering tools have emerged as essential components in the AI development ecosystem, offering structured approaches to creating, testing, and optimizing prompts for large language models. While each platform brings unique strengths to the table, all share the common goal of improving AI output quality and consistency while streamlining the development process. Teams evaluating these tools benefit from matching each platform's capabilities to their specific workflows and integration requirements rather than comparing feature lists alone.